Introduction

Whether you think artificial intelligence will save the world or end it, you have Geoffrey Hinton to thank. Hinton, often called “the godfather of AI”, is a British computer scientist whose groundbreaking ideas have helped shape artificial intelligence and, in turn, change the world. While Hinton believes AI has the potential to bring enormous benefits, he also has a warning: AI systems may already be more intelligent than we realize, and there is a possibility that machines could take over. This raises an important question: Does humanity know what it’s doing?

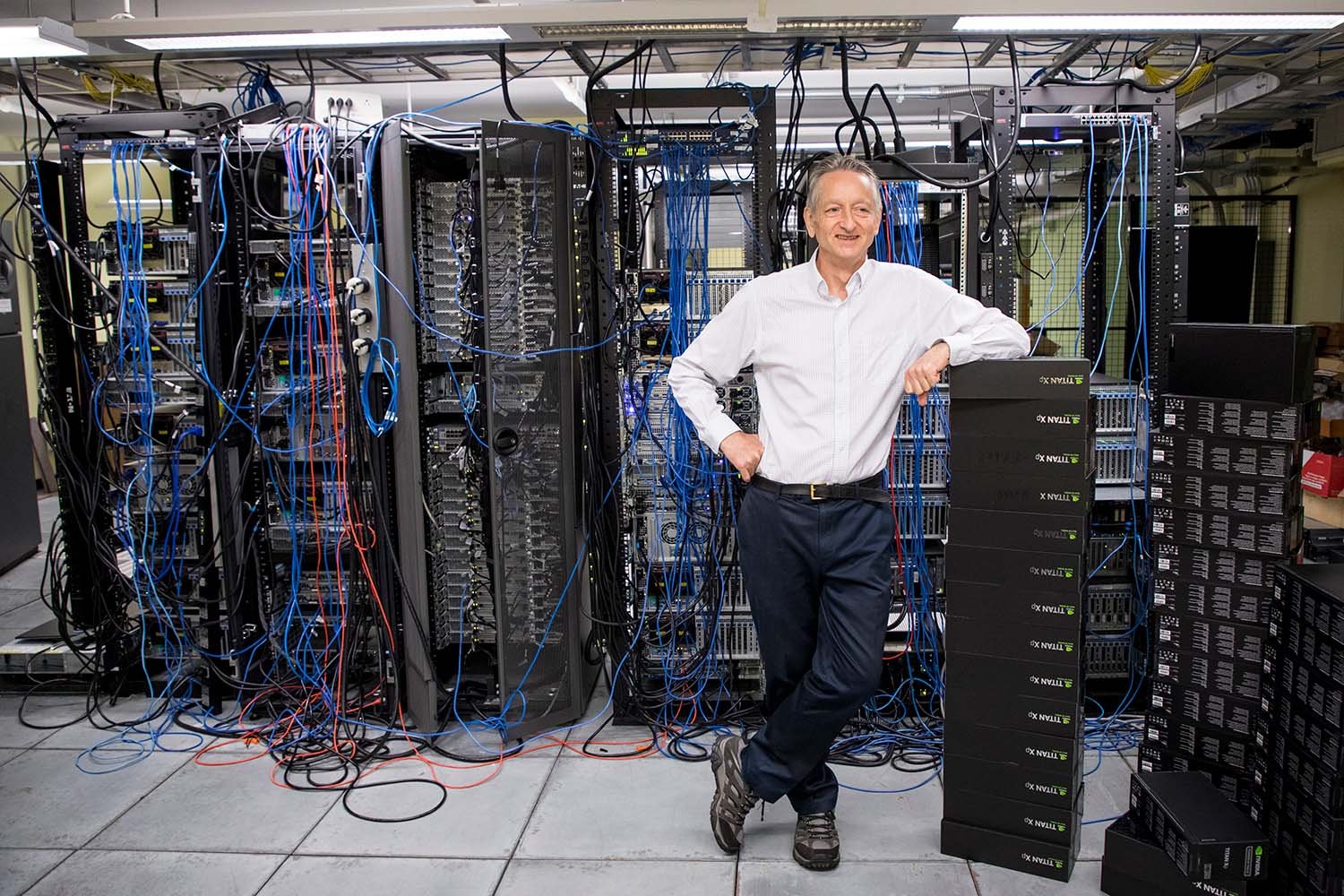

Figure 1.1: Geoffrey Hinton in 2022

Does humanity know what it’s doing?

Hinton’s answer is no. He believes humanity is entering an era in which, for the first time, machines may surpass human intelligence. He argues that AI systems can understand, learn from experience, and make decisions based on what they have learned, much as humans do. While he acknowledges that today’s AI has little self-awareness and is not truly conscious, he warns that machines will grow more intelligent over time and eventually exceed human capabilities.

A brief history of AI

In the 1970s, Hinton was working on his PhD at the University of Edinburgh, developing neural networks as a way to simulate certain functions of the human brain. His advisor discouraged him, arguing that no one seriously believed software could replicate human cognition. Nearly 50 years later, however, Hinton’s vision became reality. In 2019, he, Yann LeCun, and Yoshua Bengio received the Turing Award, often referred to as the Nobel Prize of computing, for their pioneering work on neural networks.

Figure 3.1: Geoffrey Hinton, Yann LeCun and Yoshua Bengio at the Turing Award ceremony

How AI works

Scott Pelley, who interviews Hinton for 60 Minutes [1], explains AI in accessible terms. He describes how Hinton and his collaborators built AI from layered software, with each layer handling a different part of a problem. This structure is known as a neural network. It learns by strengthening the connections that lead to correct results and weakening those that lead to incorrect ones, so that through trial and error the machine gradually teaches itself. Imagine a robotic soccer player: when it scores a goal, a message is sent back through its network layers, reinforcing the pathway that produced the successful decision. Conversely, when the robot makes an error, a corrective signal is sent back through the layers, weakening the connections responsible.
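The idea of strengthening and weakening connections can be made concrete with a very small sketch. The code below is not Hinton's method or anything shown in the interview: it is a single artificial neuron trained with a simple error-correction rule, whereas real neural networks stack many layers and pass the corrective signal back through all of them. The function names, the learning rate, and the toy "shoot at goal" data are all assumptions made up for illustration.

```python
import math


def sigmoid(x):
    """Squash any number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-x))


def predict(weights, bias, inputs):
    """Estimated chance (0..1) that shooting scores, given the inputs."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(total)


def train(examples, epochs=2000, learning_rate=0.5):
    """Learn connection weights by trial and error (illustrative only)."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            # error > 0: the neuron was too pessimistic, so strengthen.
            # error < 0: the neuron was too optimistic, so weaken.
            error = target - predict(weights, bias, inputs)
            for i, x in enumerate(inputs):
                # Only connections from active inputs (x != 0) change.
                weights[i] += learning_rate * error * x
            bias += learning_rate * error
    return weights, bias


# Hypothetical toy data: (near_goal, path_clear) -> 1 if the shot scored.
# In this made-up world a shot scores only when both conditions hold.
EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    weights, bias = train(EXAMPLES)
    for inputs, target in EXAMPLES:
        print(inputs, target, round(predict(weights, bias, inputs), 3))
```

After training, the neuron should rate a shot as likely to score only when the robot is both near the goal and has a clear path. Nobody wrote that rule into the code; it emerges from repeated small corrections to the weights.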

In 2023, Hinton said that AI systems may already be better at learning than humans. Their “brains” are relatively small: even the largest AI models have only about a trillion connections, compared with roughly 100 trillion in the human brain. Yet with those one trillion connections, an AI system can hold more knowledge than a human does with 100 trillion, which suggests that AI has found a more efficient way of getting knowledge into its connections. However, Hinton emphasizes that as AI systems become more complex, we struggle to understand exactly how they operate. We designed the learning algorithm, but we did not design the intricate mechanisms that emerge as it learns from data. Hinton compares this to designing the principle of evolution: the rule is specified, but the detailed outcomes it produces are not.

Hinton’s warnings

Hinton predicts that within five years, AI could surpass humans in reasoning, a development that presents both great risks and great benefits. One of the most promising applications of AI is in healthcare. AI is already comparable to radiologists in interpreting medical images and is highly effective at designing new drugs. Hinton sees this as an area where AI can bring almost exclusively positive outcomes. However, he also highlights significant risks. One major concern is mass unemployment: if machines outperform humans in many jobs, entire classes of people could become undervalued and jobless. Other immediate risks include the spread of fake news, unintended biases in hiring and policing, and autonomous battlefield robots.

Is there a path to ensuring safety?

“I don’t know. I can’t see a path that guarantees safety”, Hinton admits. “We’re entering a period of uncertainty, dealing with things we’ve never encountered before. And usually, when humanity faces something completely new, we make mistakes. But with AI, we can’t afford to make mistakes.”

“Why not?” asks Pelley.

“Because AI might take over”, Hinton replies. “I’m not saying it will happen, but it’s a possibility.”

This conversation echoes the story of J. Robert Oppenheimer, the physicist who helped develop the atomic bomb, only to later campaign against the hydrogen bomb. Like Oppenheimer, Hinton is a man whose innovations changed the world, yet he now finds the future beyond his control.

Figure credits

Figure 1.1: Department of Computer Science, University of Toronto.

Figure 3.1: Association for Computing Machinery / Misti Layne.

References

[1] 60 Minutes, “Godfather of AI” Geoffrey Hinton: The 60 Minutes Interview, YouTube, 2023.